In recent years, as the hottest branch of machine learning, deep learning has been playing an important role in production and everyday life. Due to its significant advantages over traditional machine learning algorithms, deep learning performs very well in areas such as image classification, speech recognition, cancer diagnosis, rainfall forecasting, and self-driving cars.

A deep neural network (DNN) is a kind of deep learning technique that was initially designed to function like the human nervous system and the structure of the brain. Compared to the earlier shallow networks, a DNN consists of multiple layers of nodes, including input, hidden, and output layers. The nodes of each layer are connected to the nodes of the adjacent layers by weights, and each node has an activation function. Most of the activation functions are nonlinear, such as sigmoid, ReLU, and tanh. The inputs are multiplied by their respective weights and summed at each node, and the sum is transformed via an activation function. The output of the activation function is then fed as input to the nodes in the next layer. This process continues until the output layer is reached. The final output is processed by other methods to solve real-world problems.

In order to achieve the right output, the parameters of the DNN need to be optimized. The DNN comprises complicated functions with many nonlinear transformations, so most of the resulting objective functions are non-convex, with both local and global optima. Many optimization methods can be used to find the optimal parameters, including backpropagation (BP) algorithms, Markov chain Monte Carlo (MCMC) algorithms, evolutionary algorithms, and reinforcement learning. MCMC algorithms are a class of sampling algorithms used to obtain a sequence of random samples from a probability distribution. They work by constructing a Markov chain with an equilibrium distribution equal to the target probability distribution.
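The layer-by-layer computation described above (weighted sum at each node, then an activation, then on to the next layer) can be sketched in a few lines of Python. This is only a minimal illustration: the toy weights, the `forward` and `layer_forward` helper names, and the omission of bias terms are assumptions made for the example and do not come from the text.

```python
import math

def relu(x):
    """ReLU activation: pass positive values through, clamp negatives to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Sigmoid activation: squash any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, activation):
    """Compute one layer: weighted sum at each node, then the activation."""
    outputs = []
    for node_weights in weights:
        total = sum(w * x for w, x in zip(node_weights, inputs))
        outputs.append(activation(total))
    return outputs

def forward(inputs, layers):
    """Feed each layer's activations into the next until the output layer."""
    activations = inputs
    for weights, activation in layers:
        activations = layer_forward(activations, weights, activation)
    return activations

# Hypothetical toy network: 2 inputs -> 2 hidden ReLU nodes -> 1 sigmoid output.
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], relu),
    ([[1.0, -2.0]], sigmoid),
]
prediction = forward([2.0, 1.0], toy_layers)
```

Here the hidden layer produces the activations 1.0 and 1.5, and the output node applies the sigmoid to their weighted sum of -2.0.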

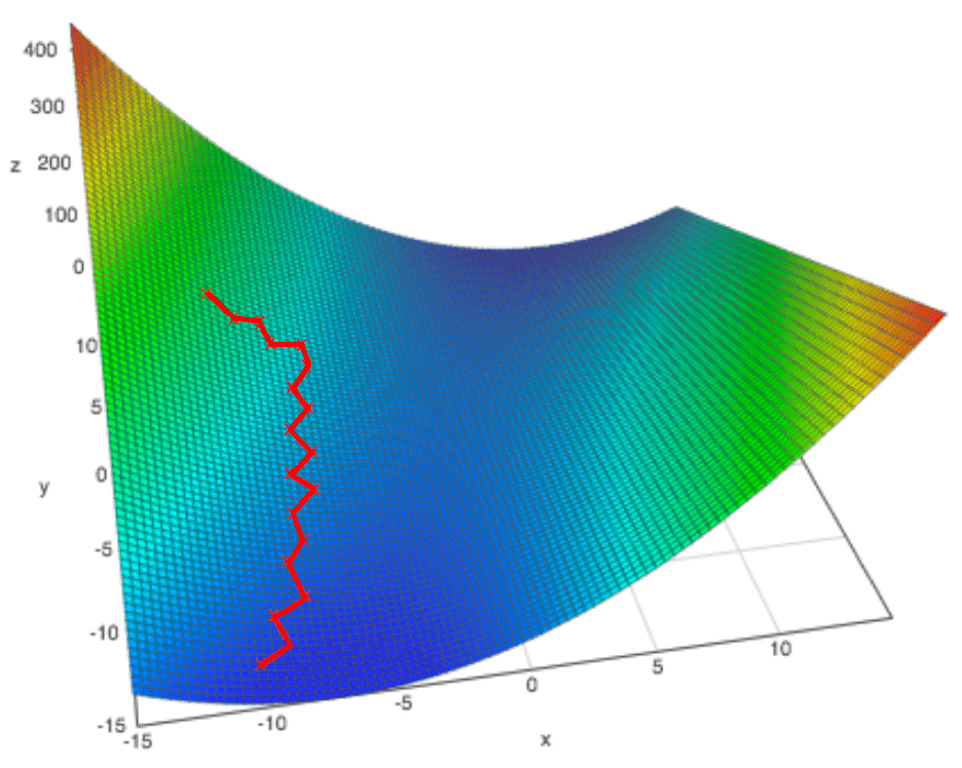

In stochastic gradient descent, one example is processed per iteration, so there is no guarantee that the cost function decreases with every step. Over time, the general direction of stochastic gradient descent still moves toward the minimum. Stochastic gradient descent does not always converge exactly to the minimum of the cost function; instead, it can keep oscillating around it. With large data sets containing millions of examples, and after a reasonable number of iterations, the value of the cost function will be so close to the minimum that any differences are negligible.

In recent years, deep neural networks (DNN) have been widely used in many fields. Training them takes considerable effort because a deep network has numerous parameters. Some complex optimizers with many hyperparameters have been used to accelerate network training and improve generalization ability. Tuning these hyperparameters in a complex optimizer is often a trial-and-error process. In this paper, we visually analyze the different roles of training samples in a parameter update and find that each training sample contributes differently to the update. Furthermore, we present a variant of batch stochastic gradient descent for neural networks that use ReLU as the activation function in the hidden layers, called adaptive stochastic gradient descent (aSGD). Unlike existing methods, it calculates an adaptive batch size for each parameter in the model and uses the mean effective gradient as the actual gradient for parameter updates. Experimental results on MNIST show that aSGD can speed up the optimization process of a DNN and achieve higher accuracy without extra hyperparameters. Experimental results on synthetic datasets show that it can find redundant nodes effectively, which is helpful for model compression.
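The noisy behaviour of plain stochastic gradient descent described above can be observed on a small synthetic problem. The sketch below is an illustration under assumed settings (a one-parameter linear model, Gaussian noise, a fixed learning rate of 0.1, and a fixed random seed); it is not the aSGD method and does not reproduce any experiment from the text. It records the full-data cost after every single-example update and counts how often that cost went up.

```python
import random

def mean_squared_error(w, xs, ys):
    """Full-data cost of the one-parameter model y ≈ w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Assumed synthetic data: y = 3x plus Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
ys = [3.0 * x + rng.gauss(0.0, 0.5) for x in xs]

w = 0.0
learning_rate = 0.1
costs = [mean_squared_error(w, xs, ys)]

for _ in range(5):  # five passes over the shuffled data
    order = list(range(len(xs)))
    rng.shuffle(order)
    for i in order:
        # Update from the gradient of a single example's squared error.
        w -= learning_rate * 2.0 * (w * xs[i] - ys[i]) * xs[i]
        costs.append(mean_squared_error(w, xs, ys))

# Number of single-example steps after which the full-data cost rose.
increases = sum(1 for before, after in zip(costs, costs[1:]) if after > before)
```

Under these assumptions the final cost is far below the starting cost, yet `increases` is greater than zero: individual steps can move uphill even though the overall trend is downhill.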

Unlike batch gradient descent, which is computationally expensive to run on large data sets, stochastic gradient descent takes smaller, cheaper steps and is therefore more efficient while reaching a comparable result. After the data set is shuffled, stochastic gradient descent performs a gradient descent step based on a single example and starts to change the cost function. This takes less computational power than batch gradient descent, which iterates through all the examples in the data set before each attempt to reduce the cost function.
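As a rough sketch of the procedure just described (shuffle the data, then update from one example at a time), the following Python fits a one-parameter linear model. The function name, the toy data, the learning rate, and the number of epochs are all assumptions chosen for illustration.

```python
import random

def stochastic_gradient_descent(xs, ys, learning_rate=0.01, epochs=50, seed=0):
    """Fit y ≈ w * x, updating w from one example at a time."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # randomize the data order on each pass
        for i in indices:
            # Gradient of this single example's squared error with respect to w.
            grad = 2.0 * (w * xs[i] - ys[i]) * xs[i]
            w -= learning_rate * grad
    return w

# Assumed noiseless toy data generated by y = 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 6.0]
w_sgd = stochastic_gradient_descent(xs, ys)
```

Each update touches only one example, so the cost per step does not grow with the size of the data set. Because this toy data is noiseless, `w_sgd` ends up very close to 2; with noisy data the estimate would keep fluctuating around the best value.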

BATCH GRADIENT DESCENT SERIES

Stochastic gradient descent is an optimization algorithm that improves the efficiency of the gradient descent algorithm. Similar to batch gradient descent, it performs a series of steps to minimize a cost function.
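For reference, the "series of steps" in the batch variant can be sketched as follows: every step computes the gradient over the whole data set before updating the parameter. The one-parameter model, the toy data, and the default learning rate and step count are assumptions made for the example.

```python
def batch_gradient_descent(xs, ys, learning_rate=0.1, steps=100):
    """Fit y ≈ w * x by repeatedly stepping against the full-data gradient."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error, averaged over every example.
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w

# Assumed noiseless toy data generated by y = 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 6.0]
w_fit = batch_gradient_descent(xs, ys)
```

Every step here reads all of the examples, which is what makes the batch variant expensive on large data sets.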
